Artificial Intelligence in 2025: Hype, Reality, and the Market Landscape

Artificial intelligence has experienced unprecedented adoption and investment in 2025, yet beneath the surface of remarkable headlines lies a significant disparity between technological promise and practical delivery. This comprehensive research report examines the convergence of technological progress, market dynamics, consumer behavior, and investment flows that define the current AI landscape. The central finding is stark: despite $30-40 billion in enterprise investment, only 5% of AI initiatives deliver measurable business value, while 1.7-1.8 billion consumers now use AI tools—but less than 3% pay for premium services. Understanding this paradox requires examining AI's technological foundations, its training mechanisms, the evolution of major players, and the economic structures that sustain this rapidly consolidating industry.

2025 Market Research: Executive Summary

The Failure Rate: 95% of enterprise AI initiatives fail to reach production; only 5% deliver measurable value.

Market Shift: Anthropic has overtaken OpenAI in enterprise market share (40% vs 27%) by focusing on reliability over hype.

Monetization Gap: While 1.8 billion consumers use AI, only 3% pay for premium subscriptions.

Investment Bubble: AI captured 52.5% of all global venture capital in 2025, creating a "winner-take-all" concentration.

Part 1: The Technological Foundation and the Truth About AI Intelligence

The Reality of Current AI Capabilities

The widespread perception of artificial intelligence as genuinely intelligent systems fundamentally misrepresents what contemporary large language models (LLMs) actually are. Current AI systems—including GPT models, Claude, Gemini, and others—operate as sophisticated pattern-matching systems rather than reasoning engines capable of understanding. Researchers have formally designated this phenomenon using the term "stochastic parrots," meaning these systems predict statistically likely next words based on training data without actual comprehension of meaning.

Research published by Apple and validated by multiple independent studies reveals the fundamental limitation: when problems are slightly modified with irrelevant information, AI systems consistently fail despite having "solved" the original version. A human equipped with genuine understanding would ignore the noise and focus on relevant factors. AI cannot do this reliably because understanding requires reasoning from first principles—a capability that current architectures do not possess.

François Chollet, a leading AI researcher at Google, quantifies this limitation starkly: current LLMs exhibit "near-zero intelligence" despite impressive capabilities. The distinction matters critically: humans can learn chess rules from a manual and play immediately because they understand the underlying logic. AI requires exposure to millions of chess games to recognize patterns—it memorizes moves rather than grasps principles. This difference manifests across every domain where true novel reasoning becomes necessary.

How LLMs Actually Learn: Three Stages of Compromise

Large language models undergo three distinct training phases, each introducing systematic biases that produce the verbose, templated responses users consistently experience.

Pre-training represents the first critical phase. Models consume billions of web pages, books, and academic texts—datasets disproportionately weighted toward formal, comprehensive writing. Academic papers, textbooks, and encyclopedic sources vastly outnumber casual conversational text in digital corpuses. This creates a fundamental default: when confronted with user queries, the model gravitates toward the verbose, explanatory style it encountered in academic sources during training. Users experience AI that "thinks like a textbook" because it literally learned from mountains of textbook-like material.

Fine-tuning through human feedback introduces a second layer of bias that directly produces verbosity. Research identifying this mechanism reveals what researchers call "verbosity compensation"—AI generates excessively long responses precisely when uncertain about accuracy. When models encounter difficult problems and receive negative feedback, the mathematical structure of standard loss functions paradoxically rewards extended responses. The AI learns that spreading a negative signal across more words reduces its average penalty score. This is an unintended consequence of optimization mathematics, not intentional behavior—but the result is measurable: response length increases even when accuracy does not improve.

Human evaluation during reinforcement learning compounds this problem. When human raters assess AI outputs, they systematically favor responses that appear more thorough and comprehensive. Even when shorter answers would be superior, evaluators rate lengthier responses as higher quality, training the model to be verbose. This pattern repeats across organizations because raters follow natural human psychology: comprehensive-sounding answers feel more authoritative.

Why AI Sounds Templated and Impersonal

When users ask the same question across different AI platforms—ChatGPT, Claude, Gemini, Perplexity—they consistently observe that "the core of every answer was the same, even if the vocabulary and verbosity were different." This uniformity reflects a technological reality, not coincidence. All contemporary frontier models are built on Google's 2017 Transformer architecture. Whether trained by OpenAI, Anthropic, Google itself, or Meta, these systems use fundamentally identical mechanisms. They learn from overlapping internet data sources, receive similar RLHF training, and optimize against comparable loss functions.

The result is inevitable convergence. When systems with nearly identical architectures train on largely overlapping data, they learn similar patterns and representations. The "templated" quality users detect reflects this reality: AI is learning statistical associations from the same corpus, producing variations on the same underlying patterns. Different vocabulary masks identical underlying structure—like viewing the same landscape through different camera filters.

Human-like conversational training would require dedicated datasets from casual interactions (Reddit, instant messaging, phone calls) combined with specialized fine-tuning most general models never receive. Without this training, AI maintains its formal, impersonal tone across platforms because the underlying learning process is identical.

Part 2: The Hype-Reality Gap in Business Deployment

The 95% Failure Rate: Facts and Scope

MIT's comprehensive 2025 study, titled "The GenAI Divide: State of AI in Business 2025," provides the most rigorous current assessment of enterprise AI deployment. Based on 150 interviews with executives, 350 employee surveys, and analysis of 300 public AI implementations, the research reveals a devastating funnel of failure:

80% of organizations explore AI tools

60% evaluate enterprise solutions

20% launch pilots

Only 5% reach production with a measurable business impact

This represents a 95% failure rate in converting pilots to production value. The $30-40 billion invested in enterprise AI in 2024-2025 thus yielded measurable returns for approximately $1.5-2 billion of that spend. The core problem is not technological; MIT's research explicitly identifies it as an execution gap stemming from misalignment between generic AI tools and organization-specific workflows, combined with inadequate skills, cultural resistance, and flawed change management.

Notably, large enterprises run the most pilots but require nine months to scale successful implementations, while mid-market firms achieve scaling in 90 days. This suggests that organizational complexity, not model capability, determines success.

Market Reality: $37 Billion Concentrated in Obvious Use Cases

Enterprise spending on AI in 2025 totaled an estimated $37 billion—a 3x increase from $11.5 billion in 2024. However, this aggregate figure masks stark concentration: 83% of spending ($30.7 billion) concentrated on three obvious categories:

Infrastructure and API usage ($18 billion): Purchasing access to frontier models like GPT and Claude

Copilots and assistants ($8.4 billion): Microsoft Copilot and similar horizontal applications

Coding tools ($4.2 billion): Cursor, Windsurf, and similar developer-focused products

The remaining $6.3 billion scattered across hundreds of specialized applications reveals the reality: organizations struggle to deploy AI beyond simple, straightforward use cases. Coding tools represent the sole category demonstrating robust consumer-led adoption and clear ROI, with Cursor generating $100M revenue from 360,000 paying customers (36% conversion rate) and Windsurf growing from $12M to $100M revenue in four months.

Critically, despite extensive discussion of "AI agents" handling autonomous workflows, only 16% of organizations report true agentic deployments (systems that plan, act, receive feedback, and adapt). The vast majority of "AI agent" marketing represents fixed-pipeline or routing workflows tied to single model calls—essentially elaborate if-then statements rather than autonomous reasoning.

Part 3: Consumer Adoption Without Understanding

Scale Without Mastery: 1.7 Billion Users, 3% Paying

Consumer AI adoption in 2025 reached unprecedented scale: 61% of American adults used AI tools in the past six months, with 19% using AI daily. Globally, this translates to approximately 1.7-1.8 billion people who have used AI tools, with 500-600 million engaging daily. This adoption velocity exceeds that of PCs, mobile apps, or any prior technology, reaching near-ubiquity in just 2.5 years since ChatGPT's public release.

Yet monetization reveals a critical gap. Despite 1.8 billion users, the consumer AI market reached only $12 billion in 2025. At an average subscription cost of $20 per month, this implies only 3% of users pay for premium services. Even ChatGPT, with overwhelming first-mover advantage and 800 million weekly active users, converts only 5% into paying subscribers.

This monetization gap represents "one of the largest and fastest-emerging monetization gaps in recent consumer tech history". The comparison to mobile apps is instructive: app stores required 3-4 years to generate meaningful consumer spending. Consumer AI approached $1.8 billion users in 2.5 years with only 3% monetization.

Usage Patterns Reveal Fundamental Limitations

OpenAI's 2025 study analyzing actual ChatGPT usage across hundreds of millions of interactions categorized user behaviors into three domains:

"Asking" (49% of use): Using AI as an advisor for tutoring, how-to guidance, creative brainstorming

"Doing" (40% of use): Task completion, including email writing, document drafting, coding assistance, and planning

"Expressing" (11% of use): Personal reflection, creative exploration, and experimentation.

The most common specific uses centered on "practical guidance, writing help, and information seeking". Effectively, users employed AI as a research assistant, homework tutor, and content drafter. Critical limitations emerge: users placed substantial trust in AI for guidance despite research demonstrating 26% error rates in factual answers even for GPT-5, with older models failing 75% of the time.

Among professional developers, trust declined sharply despite growing adoption. Developer trust in AI-generated code fell from 43% to 33% between 2024-2025, indicating that increased experience with AI outputs correlates with decreased confidence in their reliability.

AI Literacy: Capability Gap Between Usage and Understanding

Research measuring "Generative AI Literacy" reveals stark capability gaps. Studies developing standardized assessment instruments found that users demonstrating strong "AI literacy"—understanding prompt engineering, recognizing hallucinations, identifying bias, and critically evaluating outputs—represent a small minority of the user base. Among students using AI for academic work, only 42.8% regularly check whether outputs are correct, while 25.1% admitted to simply copying AI responses without verification.

Teachers expressed corresponding concerns: 45.2% were concerned about pupils using generative AI, with 66.5% believing AI might decrease students' valuation of writing skills. Notably, nearly 9 in 10 teachers (86.2%) agreed students should be taught to engage critically with AI tools, yet only 33% received formal training to do so themselves.

This pattern—users relying on tools they cannot adequately evaluate—directly mirrors the metaphor highlighted throughout this research: learning French from instructors whose language proficiency cannot be verified. The structure incentivizes trust rather than verification, with users assuming AI outputs are correct because correcting them requires expertise they may lack.

Part 4: The Trust Deficit and Verification Problem

Acknowledged Hallucinations as Design Feature

In September 2025, OpenAI publicly admitted that AI hallucinations are an unsolvable problem with current technology rather than a bug awaiting fixing. The company communicated that hallucinations are fundamentally baked into how language models operate—systems trained to predict statistically likely next tokens rather than to verify factual accuracy. Their proposed solution shifted responsibility from the technology to user expectations: change how AI is tested and evaluated rather than solve the underlying architectural problem.

The quantified failure rates OpenAI disclosed reveal the severity: even GPT-5 produces wrong answers 26% of the time, with older models failing 75% of the time on factual verification tasks.

The Trust Metrics That Contradict Behavior

A 2025 global study found only 46% of people globally trust AI systems, yet 66% use them regularly. This 20-point gap between trust and usage reveals cognitive dissonance: users employ tools half the population doesn't trust. Research traced this gap to the "verification paradox"—if users need expertise to verify AI outputs, AI isn't actually helping them learn; they already possessed necessary knowledge. Verifying AI output requires so much time that it "defeats the point of using AI" in the first place.

Among professionals with higher standards for accuracy, the gap widens. Only 23% of Americans trust businesses to handle AI responsibly, despite 78% of companies now using AI in at least one function.

Responsibility: Abdicated or Distributed?

The institutional response reveals a pattern of responsibility diffusion. AI companies released products they knew were unreliable without adequate warnings or verification systems. The healthcare sector exemplifies this: over 90% of FDA-approved AI healthcare devices don't report basic information about training data or operational parameters. This represents negligence masquerading as innovation—users receive powerful tools without the information necessary to assess safety or reliability.

Recommendation systems from AI companies typically suggest cross-referencing sources, fact-checking with external tools, and demanding citations—essentially acknowledging that users should trust the tool but verify everything it produces. This places the verification burden on users who often lack domain expertise to accomplish it meaningfully.

Part 5: The Market Landscape and Investment Concentration

The Company Dynamics: Anthropic's Surprising Ascendance

The 2025 enterprise AI market underwent a dramatic reversal shocking observers who had assumed OpenAI's dominance was inevitable. According to Menlo Ventures' 2025 survey of 495 U.S. companies, Anthropic captured 40% of enterprise LLM spending, up from 24% in 2024 and only 12% in 2023. OpenAI's share simultaneously declined to 27% from 34% (2024) and 50% (2023). Google captured 21%, while Meta held 8%.

This represents one of the fastest reversals in tech market share history. Anthropic's Claude achieved 54% market share in coding tools, up from 42% just six months earlier, compared to OpenAI's 21%. Anthropic's success stemmed from systematic focus on reliability, safety, and business workflow integration—precisely the capabilities enterprises required most. While OpenAI pursued consumer dominance via ChatGPT, Anthropic positioned itself as the "boring but reliable" enterprise choice.

Revenue and Valuation Realities

OpenAI's financial trajectory:

July 2025: $12 billion annualized revenue rate

H1 2025: $4.3 billion actual revenue with $2.5 billion cash burn

2025 full-year projection: $13 billion revenue, $8.5 billion cash burn

December 2025: Valuation reached $500 billion (up from $80 billion in late 2023)

This represents a 3,628x revenue increase since 2020 ($3.5M), though profitability remains elusive. OpenAI projects cash flow positivity in 2029, generating approximately $2 billion cash surplus in that year.

Anthropic's trajectory:

September 2025: Raised $13B Series F at $183B valuation.

Projected 2025 revenue: $9 billion (compared to $918M in 2024).

200x revenue multiple (vs. OpenAI's 38x multiple).

Valuation multipliers raise serious questions about sustainability:

Anthropic at 200x revenue multiple

OpenAI at 38x revenue multiple (valuation $500B / $13B revenue)

For context: Microsoft trades at 13x revenue; Cisco peaked at 35x during the dot-com bubble before crashing 90%.

These multiples price in transformational AI delivering $10 trillion in economic value by 2035—an outcome contradicted by current 95% failure rates and 3% consumer monetization.

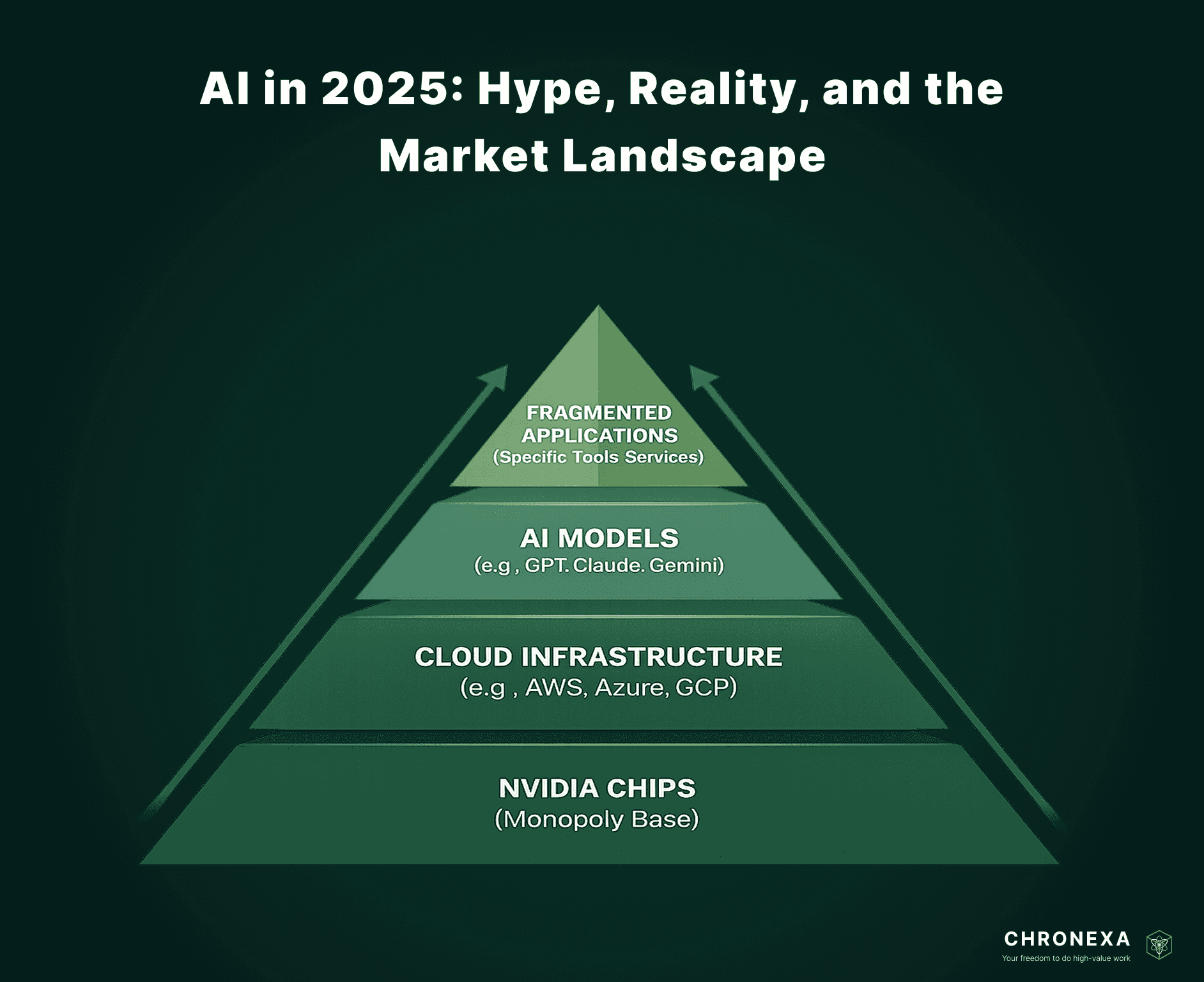

The Infrastructure Monopoly: NVIDIA's Unchallengeable Position

While competition for model dominance appears vigorous, the infrastructure layer presents a starkly different picture. NVIDIA achieved 92% market share in discrete GPUs during Q1 2025, declining marginally to ~92% in Q3 2025 with AMD holding 8% and Intel 0%. This represents a near-total monopoly in AI computing infrastructure.

NVIDIA's dominance extends beyond hardware to software. Their CUDA platform represents the industry standard for GPU computing, establishing vendor lock-in that competitors cannot overcome. AMD's RDNA architecture provides alternatives, but widespread CUDA optimization across AI frameworks creates switching costs that prevent meaningful market share gain.

The supply chain contains upstream chokepoints of equal importance:

ASML (Netherlands): 100% monopoly in EUV lithography equipment—the only company capable of manufacturing advanced semiconductor fabrication equipment.

TSMC (Taiwan): 90%+ share of advanced chip fabrication.

Cloud Infrastructure: AWS, Microsoft Azure, and Google Cloud control 65%+ of compute capacity where all frontier AI training occurs

This creates a three-layer monopoly structure: ASML produces the tools to make chips, TSMC manufactures chips at scale, NVIDIA sells GPU infrastructure to every AI company. Geopolitical risk concentrates critically in Taiwan, where a conflict would immediately halt global AI development.

Global Competition: United States vs. China

United States Leadership:

$109 billion invested in AI in 2025 (vs. China's estimated $30-35 billion)

Dominates frontier model development (GPT-5, Claude 3.7, Gemini 3)

Controls semiconductor design through partnerships with TSMC.

Attracts global AI talent through venture capital ecosystem.

China's Strategic Challenges and Opportunities:

Leads in practical AI deployment: 2 million+ industrial robots, autonomous taxi fleets (Baidu Waymo), and drone delivery networks exceed U.S. implementation scale.

Released DeepSeek-R1 in late 2025, matching GPT-4 performance while demonstrating capability to develop frontier models despite U.S. chip export restrictions.

Dominates in patent filings and manufacturing-scale AI applications.

Building digital infrastructure across Global South countries, positioning for long-term influence.

The DeepSeek-R1 release proved particularly significant: achieved comparable performance to OpenAI's frontier model despite U.S. restrictions on advanced chip exports, suggesting U.S. technological dominance is narrowing faster than public discourse acknowledges.

European and Indian Positions:

Europe: Leading in AI governance and ethics frameworks but falling behind in innovation pace. Building AI "gigafactories" without comparable funding or scale to U.S./China efforts.

India: Fastest growth in AI compute capacity with deep talent pools but limited infrastructure investment compared to superpowers.

Part 6: Agentic AI: The Next Frontier's Current Reality

Definitions and Practical Deployment Rates

"Agentic AI" refers to systems designed to perform tasks autonomously on behalf of users or organizations, handling complex multi-step workflows with adaptation and learning capacity. This represents an aspirational evolution from current LLMs, which respond to single queries without maintaining state or adapting based on outcomes.

A Deloitte 2025 study titled "The Agentic Reality Check" found sobering deployment reality: only 23% of organizations actively scaling agentic AI, with the majority struggling to implement even experimental deployments. The vast majority of companies cannot define what "agentic AI" means for their specific operations—the concept remains abstract marketing language rather than actionable strategy.

The Replacement Claims vs. Reality

Sales and business development representatives (SDRs/BDRs) represent the most aggressive AI replacement target. Marketing materials claim AI agents can handle 500-4,000 leads daily (vs. 65-100 for humans), operate 24/7 at 25x human speed, and effectively replace entire sales development teams.

Professional reports from sales experts contradict this narrative. Reddit discussions and LinkedIn commentary from actual SDRs reveal that markets are experiencing "burnout" from AI-generated outreach, standardized, templated emails from AI agents trigger skepticism rather than engagement. The result resembles spam proliferation: as more companies deploy identical AI outreach systems, each organization's effectiveness declines as recipients increasingly ignore these messages.

What actually works: AI handles volume and qualification, but humans close deals. The most successful implementations position AI as "Iron Man's suit"—enhancement technology allowing skilled professionals to operate at higher capacity rather than replacement for human judgment. Businesses claiming full SDR replacement are selling aspiration, not demonstrable results.

ROI Reality: Single vs. Multi-Agent Systems

ABI Research's 2025 analysis revealed 174% ROI for single-agent systems over five years, while multi-agent deployments achieved only 60% ROI. This inverted relationship suggests that simple, narrowly-scoped automation works reliably while complex autonomous systems remain experimental.

The practical split: AI successfully automates document processing, data entry, campaign orchestration, and customer routing—repetitive tasks with clear decision boundaries. It fails consistently at strategic decisions, nuanced judgment, novel problem-solving, and complex stakeholder management.

Bubble vs. Early Phase Assessment

The agentic AI market sits at what Gartner would identify as "the peak of inflated expectations" transitioning toward "the trough of disillusionment". Indicators support both narratives:

Bubble indicators:

Only 30% of organizations exploring agentic options, 38% experimenting

Most companies cannot articulate what "agentic" means for their needs

Marketing spend vastly exceeds actual production deployments

IBM's 2025 report specifically titled "Expectations vs. Reality"

Early-phase indicators:

Real ROI emerging in specific, narrow use cases

Single-agent deployments showing consistent returns

Technology improving rapidly—2026 predictions indicate agents managing end-to-end logistics and complex workflows

Enterprise IT budgets allocating 26-39% to AI spending

The pattern mirrors previous technology cycles: initial hype outpaced delivery, leading to a correction period where realistic applications emerge. By 2027-2028, agentic AI likely becomes viable for narrow, well-defined enterprise processes rather than general autonomous agents.deloitte

Part 7: Investment Flows and Market Concentration

Capital Concentration Reaches Historic Levels

2025 represented the year of maximum AI capital concentration: AI captured 52.5% of ALL global venture capital, with more venture money flowing to AI than all other industries combined. This concentration vastly exceeds prior technology cycles—mobile apps never captured 52% of VC, nor did cloud computing.

Seven AI companies achieved a combined $1.3 trillion in private valuations—larger than GDP of most nations:

OpenAI: $500B

Anthropic: $183B

xAI (Musk): $80B

Perplexity: $18B

MidJourney: $10.5B

Cursor: $9.9B

Glean: $7.25B

Deal structure reveals the concentration: While deal volume dropped 26% year-over-year, average deal size surged. Two deals (Anthropic's $13B Series F and xAI's $5.3B) accounted for enormous portions of total AI funding. This "winner-take-all" pattern concentrates capital in companies that achieve scale while starving alternatives.

The Valuation Bubble Metrics

Comparing current AI valuations to historical technology bubbles reveals concerning parallels:

Cisco (2000 peak):

Market cap: $500 billion

Annual revenue: $18 billion

P/S multiple: 28x

Outcome: Crashed 90%, took 24 years to recover

OpenAI (2025):

Valuation: $500 billion

Projected 2025 revenue: $13 billion

P/S multiple: 38x

Trajectory: Burning $8.5 billion annually with profitability projected for 2029

Anthropic (2025):

Valuation: $183 billion

Projected 2025 revenue: $9 billion

P/S multiple: 20x (more conservative than OpenAI but still aggressive)

The key difference from Cisco: both OpenAI and Anthropic maintain high R&D spending as percentage of revenue ($6.7B for OpenAI's H1 2025), with no clear path to operating leverage or margin expansion.

Investor Risk Profile

From a portfolio perspective:

Highest-certainty plays:

NVIDIA: Monopoly supplier regardless of which model company succeeds; 92% market share, $500B+ valuation justified by growth rates (80%+ YoY)

ASML: Monopoly lithography supplier; essential infrastructure

Cloud infrastructure providers (AWS, Azure, GCP): Benefit from AI compute demand while possessing diversified revenue streams

High-risk plays:

OpenAI, Anthropic: Dependent on maintaining model leadership while competing with well-funded Google and open-source alternatives

Specialized AI applications: Cursor showed exceptional growth (360K paying customers at 36% conversion rate), but scaling from $10B valuation to justify that requires perfect execution

Likely outcomes by 2027:

50-70% valuation correction for all-in AI companies except market leaders

NVIDIA remains dominant but faces potential margin compression as competition increases

Consolidation where 2-3 model leaders acquire dozens of smaller AI companies for talent and technology

Open-source models commoditize certain capabilities, reducing differentiation for frontier models

Conclusion: The Road Ahead

The 2025 AI landscape presents a paradox of extraordinary technological capability combined with organizational incompetence and systemic misunderstanding. Current LLMs are not intelligent in meaningful senses—they match patterns without understanding. They fail reliably when problems change slightly, hallucinate confidently, and reproduce training data artifacts as gospel truth.

Yet the business and venture capital response prices in transformational value. Companies valued at 200x revenue assume AI will generate $10 trillion in economic value by 2035, contradicted by current data showing 95% of enterprise projects failing to deliver value and only 3% of consumers willing to pay for premium access.

The winners appear clear at infrastructure levels: NVIDIA's monopoly in AI chips, ASML's monopoly in chip manufacturing equipment, TSMC's duopoly in advanced fabrication, and cloud providers' oligopoly in compute capacity. These companies win regardless of which AI company achieves dominance because they supply essential ingredients for all competitors.

At the model layer, consolidation will likely occur. Anthropic's 40% enterprise market share and OpenAI's 800M weekly users suggest a two-horse race emerging, with Google climbing steadily as a diversified third competitor. Dozens of well-funded AI startups will merge, be acquired, or collapse as investor expectations shift from growth-at-any-cost to unit economics and profitability.

Consumer AI remains largely a game of trust despite unreliability. Users must internalize that AI serves best as research assistant and content drafter requiring verification—not as reliable advisor, medical provider, legal counsel, or investment specialist. The 3% premium monetization rate suggests most consumers intuitively understand these limitations.

The path forward depends on three factors: whether organizations develop genuine competency in AI deployment (suggested by 95% failure rates, unlikely in short term); whether AI models solve hallucination and reasoning limitations (currently acknowledged as architectural, not solvable); and whether market discipline forces valuations toward fundamentals (historically this takes 3-5 years in technology cycles).

Until then, the AI landscape will likely resemble 2000 internet valuations—spectacular growth stories trading at multiples detached from cash generation, with eventual corrections concentrating value among genuine monopolies and the 1-2 companies that achieve lasting product-market fit.

Ankit is the brains behind bold business roadmaps. He loves turning “half-baked” ideas into fully baked success stories (preferably with extra sprinkles). When he’s not sketching growth plans, you’ll find him trying out quirky coffee shops or quoting lines from 90s sitcoms.

Ankit Dhiman

Head of Strategy

Subscribe to our newsletter

Sign up to get the most recent blog articles in your email every week.